Blog & news

Latest articles & news

Explore our latest resources below.


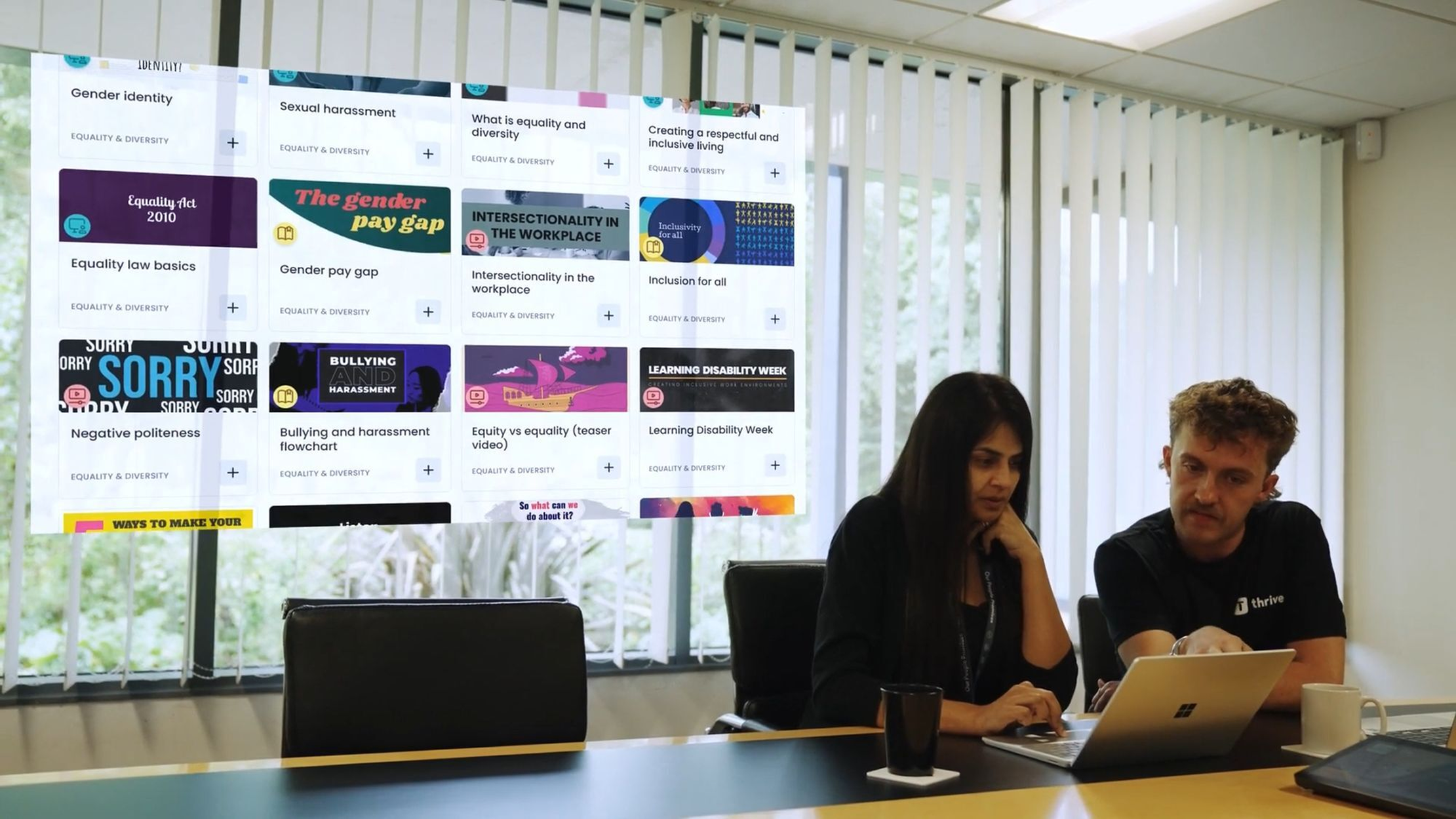

Everything you need to deliver blended learning programmes at scale

Scaling blended learning: A practical roadmap for organisations

Get the latest learning and workplace insights straight to your inbox.

Industry-leading analysis


Showing 23 of 221 articles.
